The Data City Blog

Latest news and views

We’re committed to a culture of working out loud: sharing our ideas and work as early as we can, and getting feedback from our community.

Find thought-pieces, research papers, product updates, company news and much more on The Data City blog.

You can also access and download a number of our published insights from our reports page.

Immersive Technologies

Immersive Technology’s Mental Health Innovators

Product

Data Explorer Release Notes

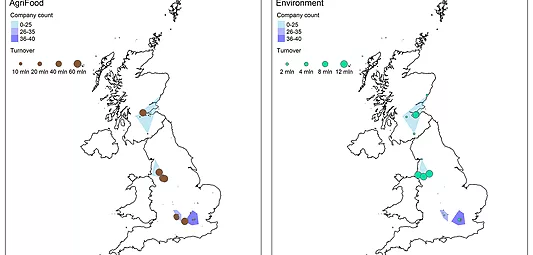

Engineering Biology

Non-medical applications of Engineering Biology: clusters and learnings

Working out loud

Identifying outliers

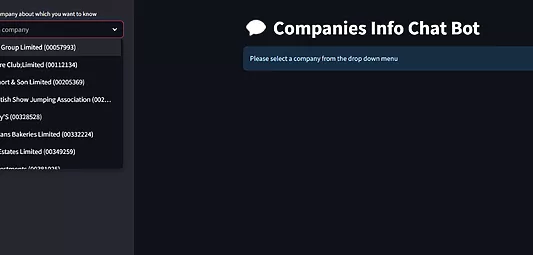

Working out loud

Experiments with LLMs

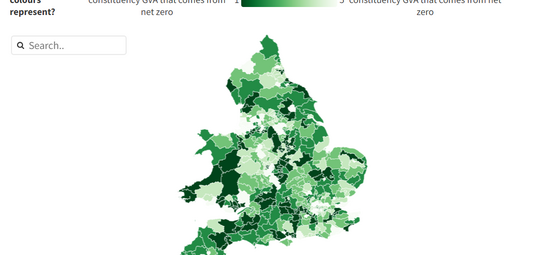

Net Zero & Reports